People interact with recommendation algorithms daily, whether on social media, music streaming platforms, or shopping websites. Understanding how these interactions shape future recommendations is crucial for both users and platform designers. Researchers have long assumed that user behavior is a direct response to the content recommended. However, our new study, using Connect participants, challenges this assumption by demonstrating that users actively strategize to influence the recommendations they receive.

What Is User Strategization?

User strategization refers to the intentional behavior changes that users make to manipulate the future recommendations they receive. For instance, a Spotify user might skip songs they like to avoid overloading their recommendations with similar tracks. In this study, conducted with colleagues at MIT and Columbia University and currently available as a preprint, we provide experimental evidence that users adapt their engagement based on their understanding of how recommendation algorithms work.

Study Design: Investigating User Strategization

To investigate this phenomenon, our research team designed a custom music player and conducted a lab experiment with 750 participants. We used Connect to recruit participants, and within a few hours we had our full sample, allowing us to begin data analysis almost immediately. The quality of the data collected through Connect was impressive: after excluding participants for technical or data quality issues, we retained nearly 90% of the sample. This efficiency and reliability made Connect an invaluable tool for our research, enabling us to gather high-quality data quickly and cost-effectively.

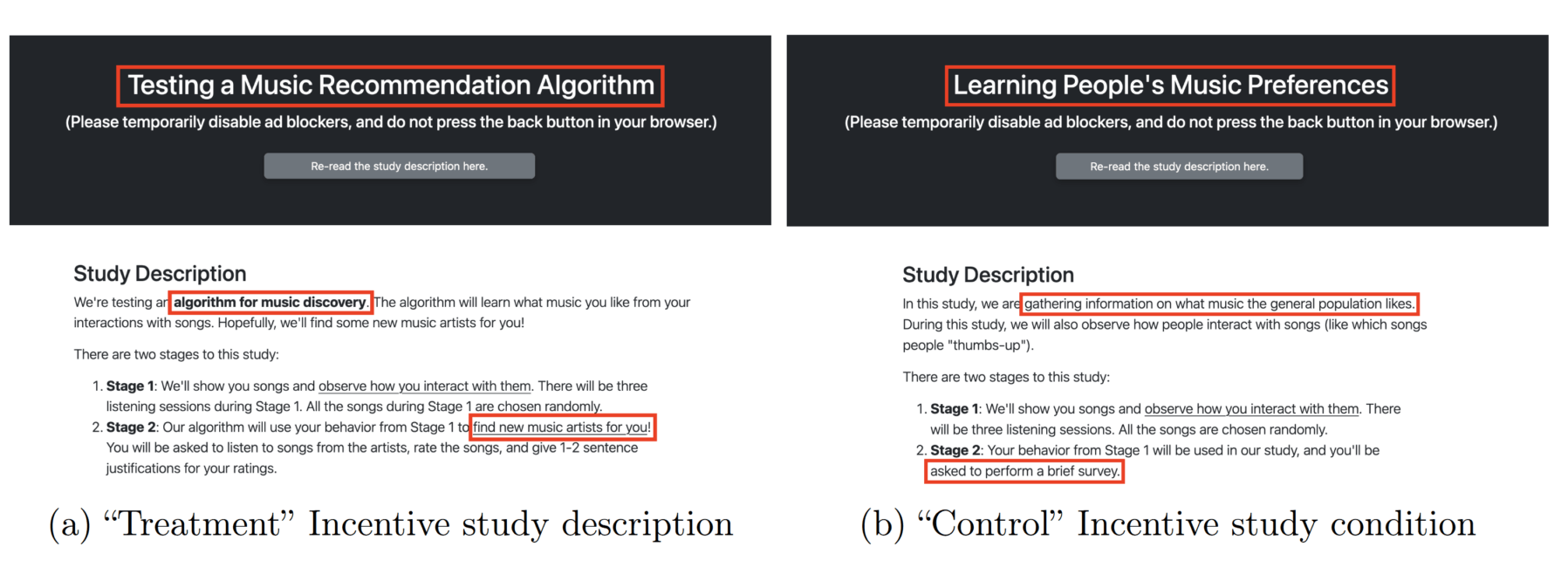

Our study tested two main hypotheses that support a theoretical model of user strategization first introduced, in a separate preprint, by a different set of collaborators. The first hypothesis posits that participants would change their behavior based on the type of feedback they believe the algorithm prioritizes. To test it, we told participants either that the algorithm focuses on likes and dislikes or that it prioritizes dwell time (how long they listened to a song), or we gave them no information about the algorithm at all. The second hypothesis posits that participants who believe their behavior will affect future personalized recommendations will interact differently than those who believe their behavior only contributes to general preference data. To test this hypothesis, we told half of the participants that their behavior on the platform would be used to curate personal recommendations, and the other half that their behavior was being aggregated into general preference data. Importantly, other than a few very small wording changes (see below), the participants all saw the same interface and songs.
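For readers who want to picture the design, here is a minimal sketch of how participants could be randomly assigned to conditions. It assumes the two manipulations are fully crossed into six cells and that assignment is balanced, neither of which is stated above. The condition labels and the `assign_conditions` helper are hypothetical names, not taken from our study materials.

```python
import random
from collections import Counter
from itertools import product

# Hypothetical labels for the two manipulations described above.
INFO_CONDITIONS = ("likes_dislikes", "dwell_time", "no_information")
USE_CONDITIONS = ("personalized", "aggregated")


def assign_conditions(participant_ids, seed=0):
    """Randomly assign participants to crossed cells in a balanced way.

    Assumption: a fully crossed 3 x 2 between-subjects design.
    """
    cells = list(product(INFO_CONDITIONS, USE_CONDITIONS))
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    # Deal shuffled participants round-robin so cell sizes differ by at most one.
    return {pid: cells[i % len(cells)] for i, pid in enumerate(ids)}


assignment = assign_conditions(range(750))
cell_sizes = Counter(assignment.values())
```

Shuffling first and then dealing round-robin keeps the cells the same size while still making each participant's cell random.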

Key Findings

Our study found significant support for both hypotheses. In support of our first hypothesis, participants behaved significantly differently depending on how they thought their preferences were being inferred. In support of our second hypothesis, participants who believed their actions would affect future recommendations behaved significantly differently from those who thought their data would be aggregated. This means, for example, that people used engagement actions (such as likes and dislikes) more frequently when they believed they would receive personalized recommendations. These findings highlight that users are not passive consumers of content but active participants shaping their recommendation environment.
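One common way to check whether two groups differ in how often they use an engagement action is a permutation test on the difference in group means. The sketch below illustrates that general technique only. It is not the analysis from our preprint, the `permutation_test` helper is a hypothetical name, and the per-participant counts are made-up numbers, not study data.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_perm=2000, seed=0):
    """Two-sided permutation test on the difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_perm):
        # Reshuffle the group labels and recompute the difference in means.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:
            hits += 1
    # Add-one correction keeps the estimated p-value above zero.
    return (hits + 1) / (n_perm + 1)


# Made-up like/dislike counts per participant, for illustration only.
personalized = [7, 9, 6, 8, 10, 7, 9, 8]
aggregated = [4, 5, 3, 6, 4, 5, 2, 5]
p_value = permutation_test(personalized, aggregated)
```

A small `p_value` here would mean that a gap this large rarely arises when group labels are shuffled at random.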

Importantly, some of the behaviors that changed were never mentioned in the instructions or text shown to participants. This indicates that our observed effects were not the result of demand effects, in which participants (sometimes subconsciously) behave the way they believe the study designers want them to behave.

Insights from the Post-Experiment Survey

To complement our experimental data, we conducted a post-experiment survey to gain deeper insights into participants’ strategic behavior. The survey revealed that a significant portion of users consciously adjusted their actions to influence recommendations. Many participants reported they often strategize on real-world platforms like Spotify and TikTok, intentionally modifying their engagement to shape their content feed. This qualitative data reinforced our experimental findings, showing that user strategization is both widespread and often deliberate.

Implications for Platforms: Understanding User Strategization

Platforms relying on user behavior data must consider the strategic nature of these interactions. Algorithms trained on data influenced by user strategization may not accurately reflect true user preferences. This misalignment can lead to suboptimal recommendations and user dissatisfaction. Our study suggests that platforms need to account for user strategization to improve the accuracy and effectiveness of their recommendation systems.
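To make the misalignment concrete, here is a toy simulation. It is not the theoretical model referenced earlier, and every parameter is an assumption chosen for illustration. A hypothetical user enjoys a fixed share of songs but strategically withholds some likes, so a platform that reads the like rate as the preference underestimates how much the user enjoys the music.

```python
import random


def observed_like_rate(true_like_prob, withhold_prob, n_songs=10_000, seed=0):
    """Simulate the like rate a platform would see for one hypothetical user.

    true_like_prob: chance the user genuinely enjoys a song (assumed).
    withhold_prob: chance the user strategically withholds a like (assumed).
    """
    rng = random.Random(seed)
    likes = 0
    for _ in range(n_songs):
        enjoys = rng.random() < true_like_prob
        withholds = rng.random() < withhold_prob
        # A like is recorded only if the user enjoys the song and does not hold back.
        if enjoys and not withholds:
            likes += 1
    return likes / n_songs


honest = observed_like_rate(true_like_prob=0.8, withhold_prob=0.0)
strategic = observed_like_rate(true_like_prob=0.8, withhold_prob=0.5)
```

In this toy setup the strategic user's observed like rate falls well below their true enjoyment rate, which is the kind of gap a platform would need to correct for.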