How to Conduct Better Research Studies with the MTurk Toolkit

By Josef Edelman, MS, Cheskie Rosenzweig, MS, & Aaron Moss, PhD

Amazon’s Mechanical Turk (MTurk) is a microtask platform launched in 2005 to help computer scientists and tech companies solve problems that lack computer-based solutions (Pontin, 2007). As MTurk grew, however, behavioral researchers realized they could use it to reach tens of thousands of people around the world. In recent years, MTurk has become an indispensable tool for social science research. Yet because MTurk was not designed with social scientists in mind, many of the tasks researchers need to accomplish when setting up and managing online studies are difficult or impossible to carry out on the platform alone.

This is where the researchers at CloudResearch (formerly known as TurkPrime) seized an opportunity. As regular users of MTurk, the CloudResearch team experienced the constraints and limitations that made setting up and managing MTurk studies difficult. In response, we created our MTurk Toolkit, which was specifically designed to simplify and improve participant recruitment on MTurk.

The MTurk Toolkit has a variety of features that save researchers time and money, improve data quality, and empower researchers to conduct studies that were previously difficult or impossible to carry out with MTurk alone (Litman, Robinson, & Abberbock, 2016). Below are some of the ways the Toolkit helps researchers run better studies on MTurk.

5 Ways the Toolkit Helps Researchers Run Better Studies on MTurk

Setting Up and Monitoring Studies with Ease

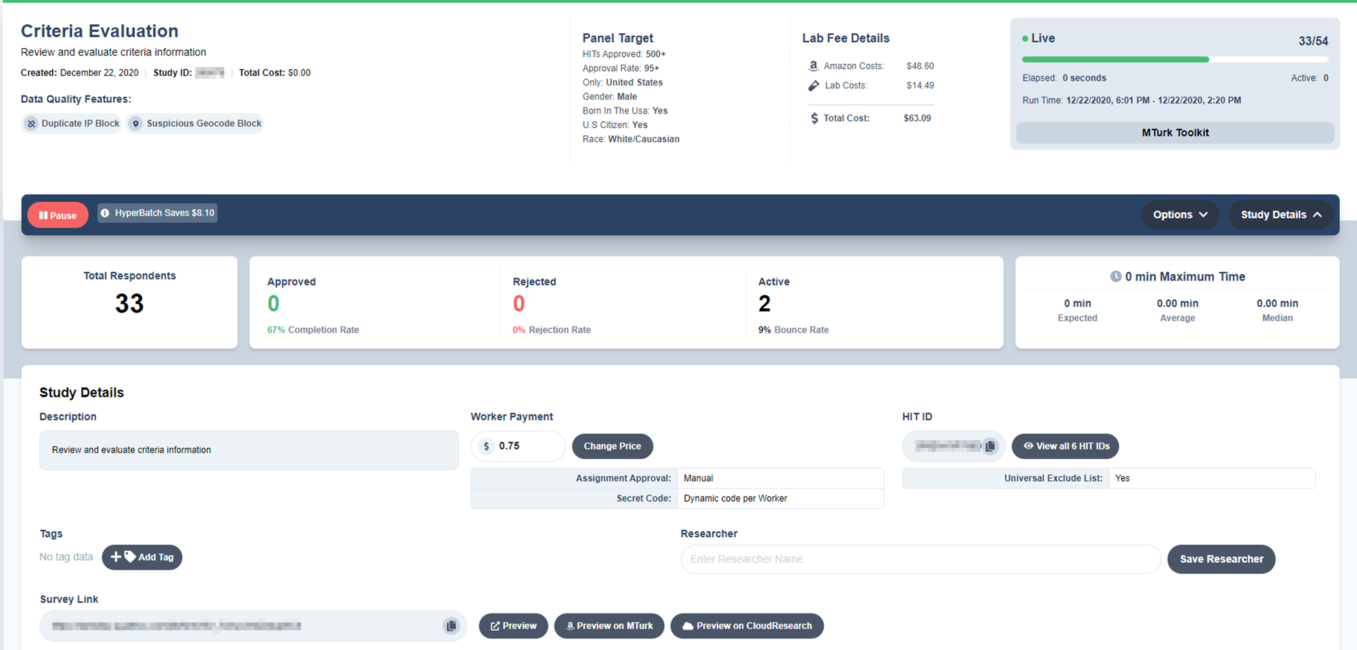

Setting up studies on MTurk can be challenging for social scientists for several reasons. First, MTurk’s interface uses programming jargon that behavioral scientists are unlikely to be familiar with. Second, the MTurk dashboard is relatively static and gives researchers few options for monitoring or managing a study once it is live. Finally, specifying which workers are eligible for a study (i.e., setting up inclusion and exclusion lists) is cumbersome and requires the researcher to manage multiple CSV files. The CloudResearch Toolkit addresses all of these issues with a variety of features, including:

- Feasibility Calculator

Some groups of participants are difficult or impossible to recruit on MTurk. The CloudResearch Toolkit includes a feasibility calculator that estimates both the cost of reaching your desired sample and the likelihood of reaching it.

- Study Batching

Mechanical Turk charges a 40% fee for studies with 10 or more respondents and a 20% fee for studies with nine or fewer. Using the CloudResearch Toolkit, we can “batch” your study into several groups of nine or fewer respondents so that you pay only the 20% fee. Batching saves you money.
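The savings from batching come down to simple arithmetic. The sketch below assumes the fee rule MTurk applies per HIT (a 20% commission, rising to 40% for HITs with 10 or more assignments) and ignores bonuses, any minimum per-assignment fee, and CloudResearch’s own fees. The function names are hypothetical and are not part of the Toolkit.

```python
from typing import List, Optional


def mturk_fee_rate(assignments: int) -> float:
    """Commission rate for one HIT: 20% up to nine assignments, 40% from ten up."""
    return 0.20 if assignments <= 9 else 0.40


def split_into_batches(n_participants: int, batch_size: int) -> List[int]:
    """Split n participants into HITs of at most batch_size assignments each."""
    full, remainder = divmod(n_participants, batch_size)
    return [batch_size] * full + ([remainder] if remainder else [])


def total_cost(n_participants: int, reward: float,
               batch_size: Optional[int] = None) -> float:
    """Rewards plus commission, rounded to cents.

    batch_size=None runs the study as a single HIT. Bonuses, minimum
    per-assignment fees, and CloudResearch fees are ignored in this sketch.
    """
    if batch_size is None:
        batches = [n_participants]
    else:
        batches = split_into_batches(n_participants, batch_size)
    cost = sum(size * reward * (1 + mturk_fee_rate(size)) for size in batches)
    return round(cost, 2)


print(total_cost(100, 1.00))                # one HIT of 100 → 140.0
print(total_cost(100, 1.00, batch_size=9))  # twelve HITs of nine or fewer → 120.0
```

With 100 participants paid $1.00 each, a single HIT costs $140.00, while eleven batches of nine plus one batch of one cost $120.00 under these assumptions.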

- Inclusion and Exclusion Lists

Specifying which workers are eligible or ineligible for your study is easy with inclusion and exclusion lists. Setting these criteria is as simple as selecting from a list of past studies or copying and pasting Worker IDs.
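Conceptually, inclusion and exclusion lists are set operations on Worker IDs. The sketch below shows that logic under two assumptions that are not documented Toolkit behavior: pasted IDs may be separated by newlines, commas, or spaces, and a worker who appears on both lists is excluded. The function names and example IDs are hypothetical.

```python
import re
from typing import Iterable, Optional, Set


def parse_worker_ids(pasted: str) -> Set[str]:
    """Turn pasted Worker IDs (newline, comma, or space separated) into a set."""
    return {token for token in re.split(r"[\s,]+", pasted.strip()) if token}


def eligible_workers(candidates: Iterable[str],
                     include: Optional[Iterable[str]] = None,
                     exclude: Iterable[str] = ()) -> Set[str]:
    """Apply an optional include list, then an exclude list.

    Assumption of this sketch: exclusion wins when a worker is on both lists.
    """
    eligible = set(candidates)
    if include is not None:
        eligible &= set(include)  # keep only workers on the include list
    return eligible - set(exclude)  # then drop anyone on the exclude list


past_study = parse_worker_ids("A1EXAMPLE\nA2EXAMPLE, A3EXAMPLE")
print(sorted(eligible_workers(["A1EXAMPLE", "A2EXAMPLE", "A4EXAMPLE"],
                              exclude=past_study)))  # → ['A4EXAMPLE']
```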

- Dashboard Indicators

Once a study is live, you can monitor its progress on the Dashboard. The Dashboard includes data on the bounce rate, completion rate, and average completion time. If a problem occurs with your study, you can pause and edit the HIT directly on the Dashboard.

- Scheduling a Launch Time

The Toolkit makes it possible to schedule a launch time for your study. When the scheduled time arrives, your study will automatically go live. This feature is useful for longitudinal studies and for controlling time-of-day biases that may affect a study.

Selective Recruitment of Respondents

MTurk allows researchers to selectively sample participants using some basic demographic criteria. CloudResearch’s MTurk Toolkit offers more than 50 panel criteria that researchers can use to target specific participants. If you don’t see a qualification you are interested in, you can request it: CloudResearch will screen tens of thousands of Mechanical Turk workers within days and create a custom qualification for you.

- Panel Options

Selectively sample people based on a wide variety of characteristics including views on climate change, whether they have purchased a car in the last 3 months, time spent playing video games, how often they drink coffee, whether they have had surgery or general anesthesia in the last 6 months, status as a pet owner, and much more.

- Target Workers by US Region or State

CloudResearch’s MTurk Toolkit allows you to sample people in specific regions of the country or by specific states.

Streamline Managing Workers

At the core of the Toolkit are several features that enhance researchers’ ability to manage MTurk workers. For example, researchers frequently need to contact workers, bonus workers, or create compensation HITs. The Toolkit streamlines these actions, saving time and effort.

- Emailing Workers

Researchers can email workers to let them know they are eligible for a study using the Email Included Workers feature. This feature is crucial when running longitudinal studies.

- Bonus Workers

Researchers may want to issue bonuses for a variety of reasons. With the Toolkit, issuing bonuses is fast and easy. Researchers can set up their study to automatically bonus all workers as they are approved or manually award bonuses in batches.

- Compensation HITs

Sometimes situations arise in which workers are unable to receive payment despite having completed a survey. The Compensation HIT feature allows researchers to quickly compensate these workers by having them simply accept and submit a compensation HIT to receive payment.

- Automatic Approval

To save time, researchers can automate worker approval. When using this feature, all workers who enter the correct approval code will be automatically approved as soon as they submit their work.
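The approval rule described above amounts to comparing each submitted code with the study’s expected code. The sketch below is a hypothetical illustration, not the Toolkit’s implementation: trimming surrounding whitespace and routing mismatches to manual review are assumptions, and `Submission` and `auto_approve` are invented names.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Submission:
    worker_id: str
    approval_code: str  # the code the worker entered when submitting


def auto_approve(submissions: Iterable[Submission],
                 expected_code: str) -> Tuple[List[str], List[str]]:
    """Approve submissions whose code matches; leave the rest for review.

    Stripping surrounding whitespace is an assumption of this sketch.
    """
    approved: List[str] = []
    needs_review: List[str] = []
    for sub in submissions:
        if sub.approval_code.strip() == expected_code:
            approved.append(sub.worker_id)
        else:
            needs_review.append(sub.worker_id)
    return approved, needs_review


print(auto_approve([Submission("A1", "XK42 "), Submission("A2", "wrong")], "XK42"))
# → (['A1'], ['A2'])
```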

Longitudinal Studies Simplified

Longitudinal studies present researchers with several obstacles, including contacting an initial group of participants, maintaining contact information, re-contacting participants, incentivizing participants to return for follow-up sessions, and managing data across multiple time points. CloudResearch’s MTurk Toolkit includes features that ease the more daunting aspects of longitudinal research.

- Including Workers

Workers can be included in a HIT by specifying previous studies workers must have completed, entering Worker IDs onto a list, or creating a Worker Group. Including workers when running a longitudinal study ensures that only people who participated in Time 1 are able to participate in Time 2.

- Notifying Workers

Once you place workers onto an Include list, you can easily notify them when successive waves of data collection launch with our Email Workers feature.

A related feature ensures that no worker can take part in more than one of several studies that are live at the same time.

- Embedding Worker IDs

Matching participant responses across multiple waves of data collection requires some identifier or unique ID. CloudResearch makes it possible to automatically embed each participant’s Worker ID into your data file as they enter your survey. This ensures you will be able to match participants across all waves of data collection.
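Once each wave’s data file carries the Worker ID, matching participants is a join on that column. The sketch below assumes each wave is exported as CSV with a column named `workerId`; that column name and the `merge_waves` helper are hypothetical, so adjust them to match your own survey export.

```python
import csv
import io
from typing import Dict, Iterable, Mapping


def merge_waves(*waves: Iterable[Mapping[str, str]],
                id_column: str = "workerId") -> Dict[str, Dict[int, Mapping[str, str]]]:
    """Match rows across waves by the embedded Worker ID.

    Returns {worker_id: {wave_number: row}} for workers present in every wave.
    If a worker appears twice within one wave, the later row is kept.
    """
    by_wave = [{row[id_column]: row for row in wave} for wave in waves]
    common = set(by_wave[0]).intersection(*by_wave[1:])
    return {wid: {n: wave[wid] for n, wave in enumerate(by_wave, start=1)}
            for wid in sorted(common)}


wave1 = csv.DictReader(io.StringIO("workerId,score\nA1,3\nA2,5\n"))
wave2 = csv.DictReader(io.StringIO("workerId,score\nA2,4\nA3,1\n"))
matched = merge_waves(wave1, wave2)
print(matched["A2"][1]["score"], matched["A2"][2]["score"])  # → 5 4
```

Only A2 completed both waves, so A1 (Time 1 only) and A3 (Time 2 only) drop out of the matched set.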

Improving Data Quality

Despite the presence of many high-quality participants on MTurk, research has revealed that a number of inattentive and fraudulent workers sometimes get into research studies. Some workers speed through studies inattentively; others lie about who they are to gain access to a study, try to access a study multiple times, or threaten data quality because they are highly experienced with the measures and manipulations commonly used in studies. CloudResearch’s MTurk Toolkit has multiple features that address these concerns. Many of these features are technological solutions; others draw on a large, ongoing screening project in which MTurk participants are vetted for data quality.

How the MTurk Toolkit Improves Data Quality:

- Block Low-Quality Participants

If you choose to Block Low-Quality Participants, people who have failed CloudResearch’s ongoing screening will be unable to access your study. This setting is the default option.

- CloudResearch-Approved Participants

If you select CloudResearch-Approved Participants, your study will be open only to people who have been vetted by CloudResearch. Participants on this list have shown prior evidence of attention and engagement, vary in MTurk experience, age, race, and income, and are generally demographically similar to other MTurk workers.

- Block Responses from Duplicate IP Addresses

This feature prevents more than one worker from the same IP address from accessing a study, limiting concerns about workers sharing study information or taking the same study with multiple accounts.
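The duplicate-IP rule can be pictured as “first arrival from each IP address wins.” The sketch below applies that rule to responses in arrival order; it is a hypothetical illustration rather than the Toolkit’s actual mechanism, which screens workers as they try to enter. The function name and example addresses (drawn from the reserved documentation ranges) are invented.

```python
from typing import Iterable, List, Tuple


def first_response_per_ip(
        responses: Iterable[Tuple[str, str]]) -> Tuple[List[str], List[str]]:
    """Keep only the first worker seen from each IP address.

    responses: (worker_id, ip_address) pairs in arrival order.
    Returns (accepted, blocked) lists of Worker IDs.
    """
    seen_ips = set()
    accepted: List[str] = []
    blocked: List[str] = []
    for worker_id, ip in responses:
        if ip in seen_ips:
            blocked.append(worker_id)  # a later worker from an IP already used
        else:
            seen_ips.add(ip)
            accepted.append(worker_id)
    return accepted, blocked


print(first_response_per_ip([("A1", "203.0.113.5"),
                             ("A2", "198.51.100.7"),
                             ("A3", "203.0.113.5")]))
# → (['A1', 'A2'], ['A3'])
```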

- Block Suspicious Geolocations

This feature blocks submissions from a list of suspicious geolocations. Suspicious geolocations are often servers that people outside of the US use to gain access to HITs meant only for people within the US. Using this feature can dramatically improve data quality.

- Verify Worker Location

The Verify Worker Country and State Location feature prevents any worker whose IP address does not match the study’s country and state settings from taking the study. This restricts the survey to workers who belong to the intended sample.

- Naivete

The naivete feature allows researchers to target workers who are unfamiliar with measures, manipulations, and experimental tasks common in the social and behavioral sciences. This feature is one way to temper the influence of MTurk “superworkers,” who take more than 40% of HITs despite making up less than 6% of the MTurk worker pool (Robinson, Rosenzweig, Moss, & Litman, 2019).

Always Developing Faster and More Accurate Online Research

Since CloudResearch was introduced in 2016, more than 6,000 labs and researchers have used our MTurk Toolkit to run studies on MTurk. This rapid growth is a testament to the Toolkit’s ability to simplify research on Mechanical Turk.

CloudResearch has now expanded beyond Mechanical Turk with Prime Panels, a platform that offers a more diverse and nationally representative sample than MTurk. Prime Panels provides access to more than 50 million participants worldwide. The size of this platform allows researchers to collect high-quality data with targeted precision. To learn more, visit https://www.cloudresearch.com/products/prime-panels/.

At CloudResearch, we are always developing new ways to make online research faster, more affordable, and more accurate. We strive to be the most dynamic and effective participant-sourcing platform on the market.